LLMs are incredibly versatile but difficult to evaluate comprehensively.

Human beliefs about LLMs shape how they're used and evaluated.

People often infer what an AI can do from limited information.

Mismatched expectations can lead to AI failures and user frustration.

Alignment with human expectations is crucial for AI success.

Understanding human generalization is key to developing more effective AI.

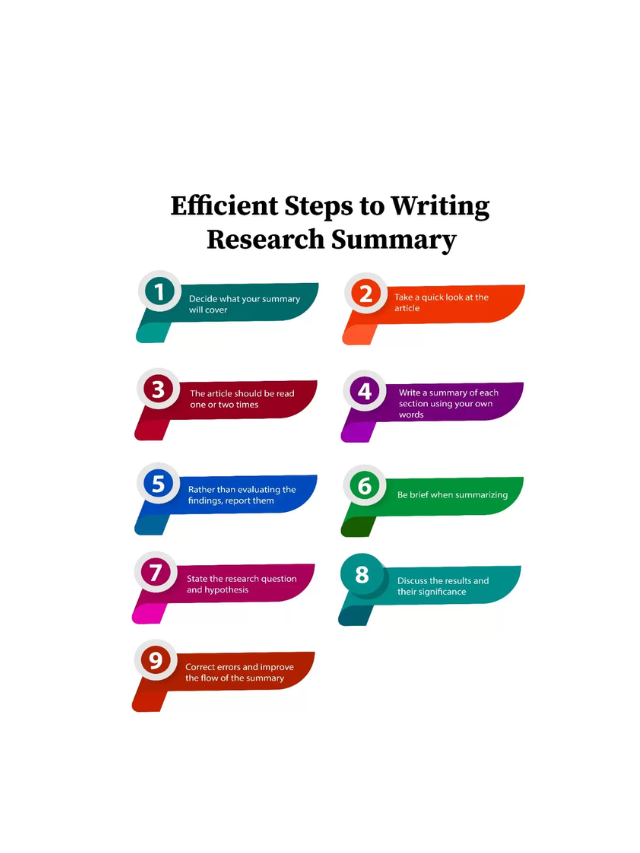
